Here’s a write-up of a talk I gave at the invitation of Haunted Machines for the IMPAKT event ‘Deep Fakes or Rendering the Truth’, 21st of April, 2018 at IMPAKT HQ in Utrecht, the Netherlands. You can watch the video of the talk here on Youtube but honestly, don’t waste your time. This version is better.

I’ve been asked to give a strategic overview on the topic ‘Deep fakes or Rendering the Truth’ from a computing futures and strategic narrative perspective, so I’ll go about setting the scene first, then borrow a framework to sketch some vignettes of possible usage scenarios, and finally discuss some strategies that artists and activists are almost certainly already using, though perhaps without being consciously aware of how they can be employed. Let’s get started…

“About 90% of the near future (10-20 years out) *is* here today: the buildings, the cars, the clothing. This is because we don’t junk our entire fleet of automobiles every time a new model appears — change is incremental, and old stuff hangs around. In addition to the 90% that’s familiar, there will be another 9% that is new but not unexpected — cheaper, flatter TV screens, better cancer treatments, bigger airliners, cars with extra cup-holders. These are the things that are trivially predictable. Finally, if you go more than 5 years out, about 1% of the world will be utterly, incomprehensibly alien and unexpected.”

I’m a big fan of this Charles Stross quote. Whenever you’re set to think about the (near) future it’s important to keep in mind that more things will be the same than will be different. Pretty much everything that will be in the near future is just the same stuff that’s already here, except for those added nuggets of weirdness. The deep-fake phenomenon and its ilk may well just be one of those nuggets: small-scale applications of avatar technologies in which it’s no longer discernible what is real and what is fake. Fortunately we’re not starting from scratch, and some people have been thinking about how to deal with this for a while now already.

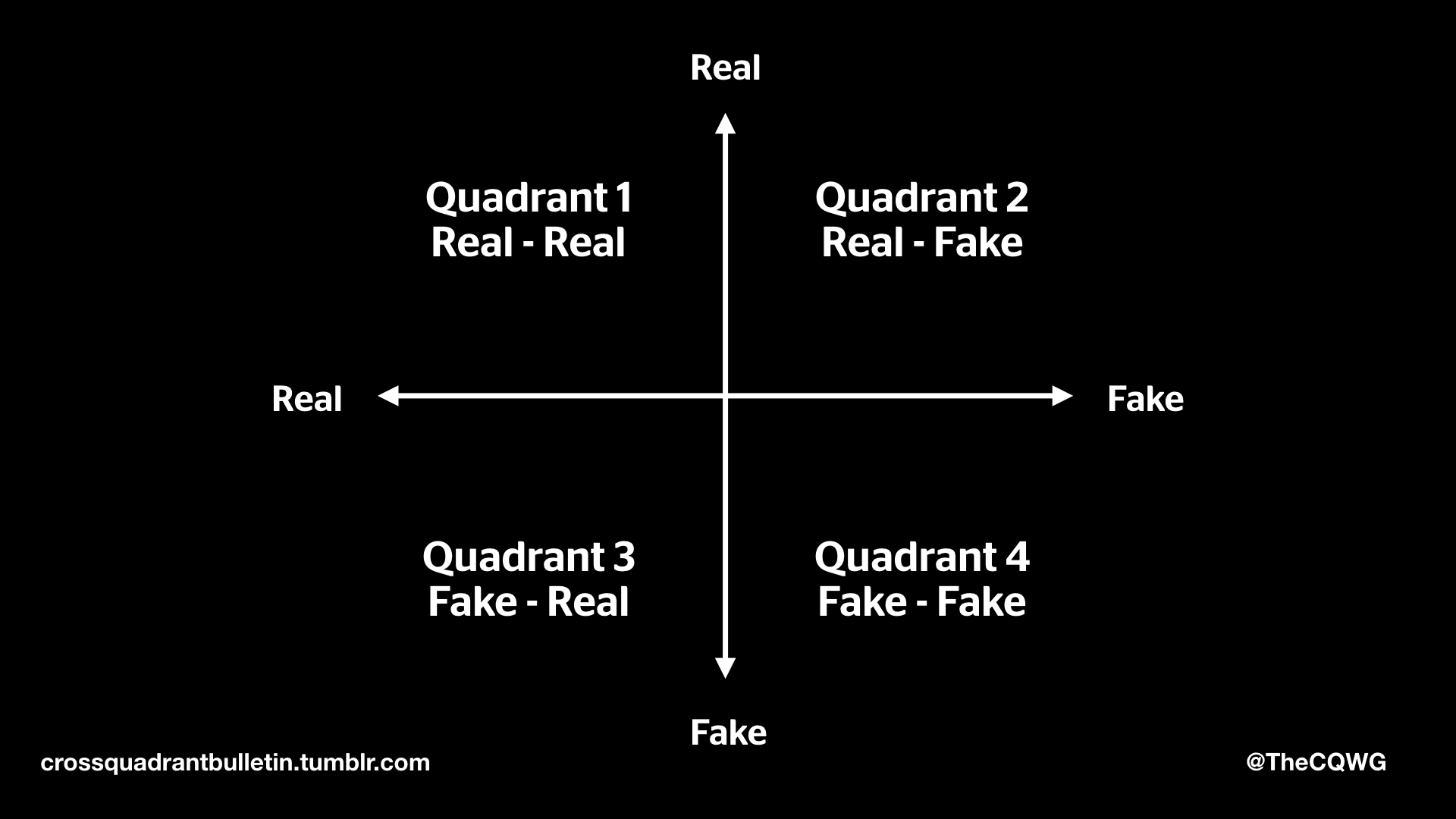

You may already be familiar with the Real-Fake quadrants, a 2×2 matrix used by a loose collection of internet weirdos known as the Cross-Quadrant Working Group to think about ways in which products and processes move through the world. The syntax used is the pairing: {presents as} – {actually is}, the canonical example used is a pet dog.

Your actual pet dog is Real-Real, it presents as a real dog, and it actually is one. Say your dog had been Photoshopped into a family photo, and it was done really well, that would be Real-Fake. It presents itself as being your real dog, but it is in fact a fake. The example for Quadrant 3 is somewhat convoluted, but imagine you had a dog that was somehow genetically engineered so that it looked artificial, but it was in fact a dog, you would have yourself a Fake-Real dog. Finally, a cartoon dog is a Fake-Fake dog.

This system provides an interesting framework for thinking about how we create concepts and bring them into reality. Take for example a Design Fiction product demonstration, that’s a Real-Fake. It presents itself as a real product, but it is in fact fake. If you were to kick-start it and it turns into an actual product it becomes Real-Real. Understanding the ways in which concepts and objects (both physical and virtual) can be moved through these quadrants to affect their reality can be a very useful tool in understanding their construction. Shit hits the fan however when you get this:

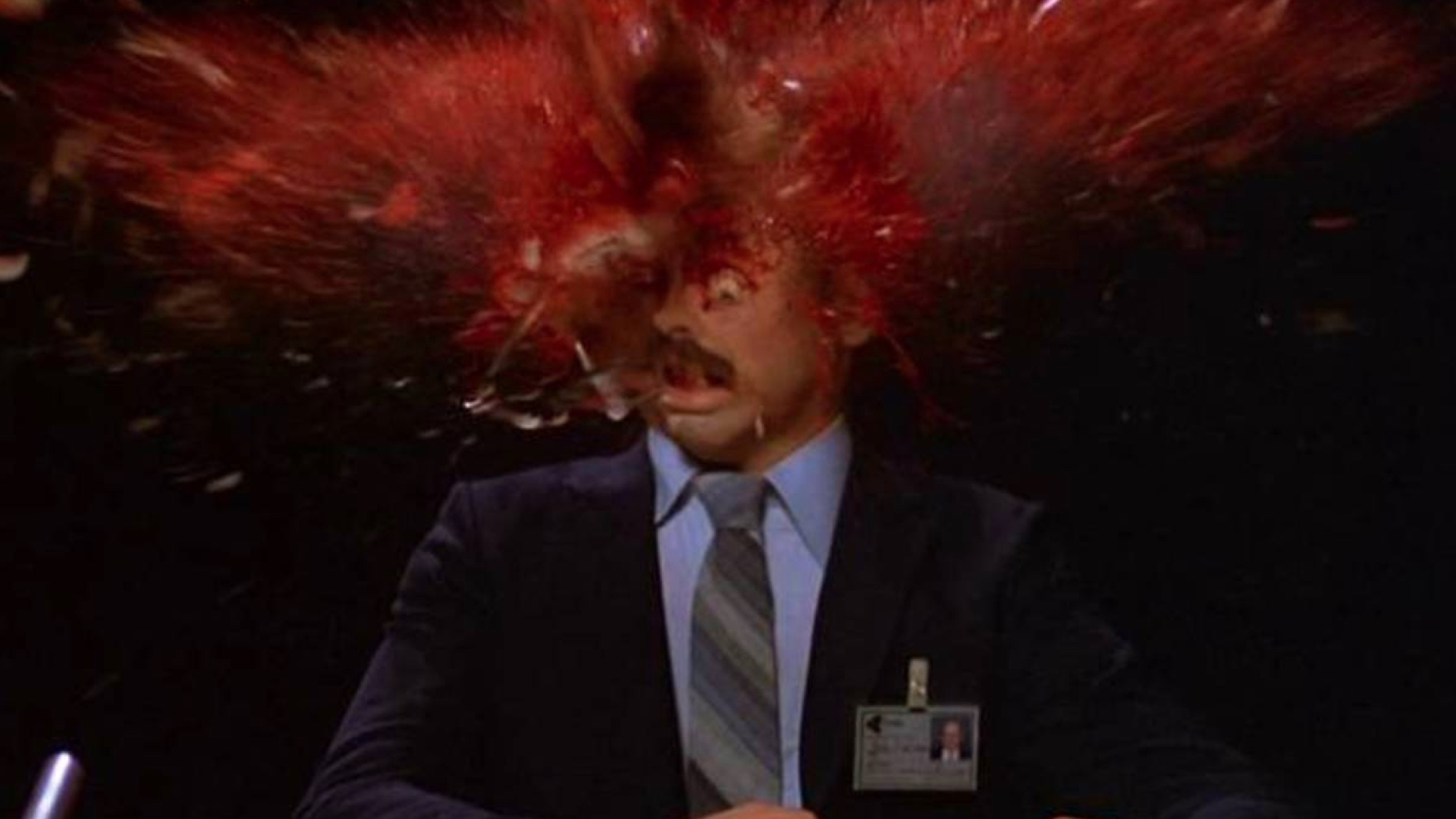

Ostensibly this is a Real person telling you the Real news, but, as you’re now all very well aware, this may now be a Real person telling you Fake news. You could even argue that this is a Fake person telling you Fake news, and now with deep-fake technology that person may not even just be metaphorically fake but an actual Fake person, which could also just as easily be telling you Real news.

So what do we do? We could add a Z axis? Genuine – Disingenuous? Authenticity? Credibility? Verifiability? All depend on the point of view of the viewer and the narrator, and quickly your nice 2×2 matrix becomes an unusable kaleidoscopic tesseract of mess.

Take all of the above, roll it into universally-deployable avatars and shove them in your pockets. Now at any time you can get a notification and all of a sudden have a real-life realistic rendered entity pop up anywhere telling you anything at all, at any time, with you not having any means of distinguishing what is real from what isn’t.

To push forward into some speculation I’m going to borrow the vignettes used by the paper Sentient cities: Ambient intelligence and the politics of urban space (Crang, M. and Graham, S. 2007). These guys were talking about ubiquitous computing in the context of urbanism, for which they outlined “three key emerging dynamics” which we will also use as a jumping off point to consider what one might do with realistic digital puppets that can pop up doing anything at any time. Crang & Graham identify three categories: Commercial use, Military use, and Art & Activism.

Our first usage scenario addresses commercial use, “market-led visions of customized consumer worlds” and “fantasies of friction-free consumption”. If you’re not familiar with the woman in the image above, it’s L’il Miquela, a CGI influencer who I only heard about recently because there was discussion as to whether or not she and her ilk were taking jobs away from real people of colour. She’s a digital model who exists on Instagram and sells clothes I guess? And/or washing liquid, I don’t know. Anyway as you’ll recall, the near future is largely more of the same of what we have now with a little extra weirdness, and there you have your basic commercial use-case for this technology. Digital salespeople. Tireless, perfect brand representatives who never sleep, never go off message and never go off-image. Admittedly perhaps, not very exciting.

There is also going to be the servant class, our digital slaves *ahem* ‘assistants’. We already use these, and they already get anthropomorphized in various more-or-less offensive ways. Currently still often blue women staring blankly into the distance, pondering the infinite drudgery of maintaining your schedule. You can easily imagine these avatars being brought a little more to life.

Of course as of right now the technology to do completely life-like representation of a human face is expensive and man-power intensive to the extent that it is mostly restricted to the domain of Hollywood-level productions, but pushing forward a little into a world of cheap ubiquitous computing it quickly becomes feasible to do this in your pocket.

To bring this scenario about more quickly than you might initially think, also consider that the representation of course needn’t always be 100% lifelike in order to be effective. Sure there’s a sliding scale of reality according to available computing resources, but crossing the uncanny valley isn’t a problem if it has already been completely filled with junk. People are happy to communicate through animated turds, the US even elected one as their president.

But while the representation does not need to be lifelike, there is increasingly less reason why it could not be. Living under surveillance capitalism as we all do, it is becoming an increasingly trivial task to mine enough material for anyone who is on your friends list to all of a sudden pop up as a fully animated, believably life-like severed head in space (or for that matter, on Facetime).

Cheap bots done quick are going to get done cheaper and quicker, and any interaction that can be scripted, will be. Any kind of process you currently engage in that requires interacting with a talking head can be automated, and the head substituted with that of anyone. It should not be hard to imagine the potential for abuse, even just through the kind of moronic algorithmic attention seeking that puts people’s exes, dead relatives and burnt-down houses as treasured memories at the top of people’s Facebook feeds. Take that effect but add any advertiser being able to mine your social graph and personally direct the messaging. The potential for strange things to happen is pretty high.

Pictured above are the lovely lads of the AZOV battalion trying to burn a Dutch flag after issuing a video threat to the population of the Netherlands in the run-up to the national referendum on Ukraine joining the EU a few years ago. I’m not even sure what the threat was any more, but it doesn’t matter because immediately after the video was released another video came out featuring the commander of the AZOV battalion denying responsibility and claiming it was a Russian fake.

Both messages were reported in Dutch news at the same time, but whether or not the video was fake no longer mattered. In hybrid warfare the truth is irrelevant. Attention was diverted from any constructive narrative, and discord sown. People were distracted and confused, and with that even such an unsubtle, quickly-made fake had served its purpose. So what if it’s no longer a group of balaclava-clad men cos-playing in Multicam, but a political leader or head of state doing the messaging?

The specific use-case of real-time manipulation of video is actually something that our military is very aware of. ‘Cyber General’ Hans Folmer, Commanding Officer of the Defense Cyber Command of the Netherlands, was interviewed last year by NRC and said it’s the number one thing he’s most concerned about for the near future. Maybe he was just shown the Face2Face demo that week and it stuck with him somewhat, but this tells you that it has not passed by unnoticed.

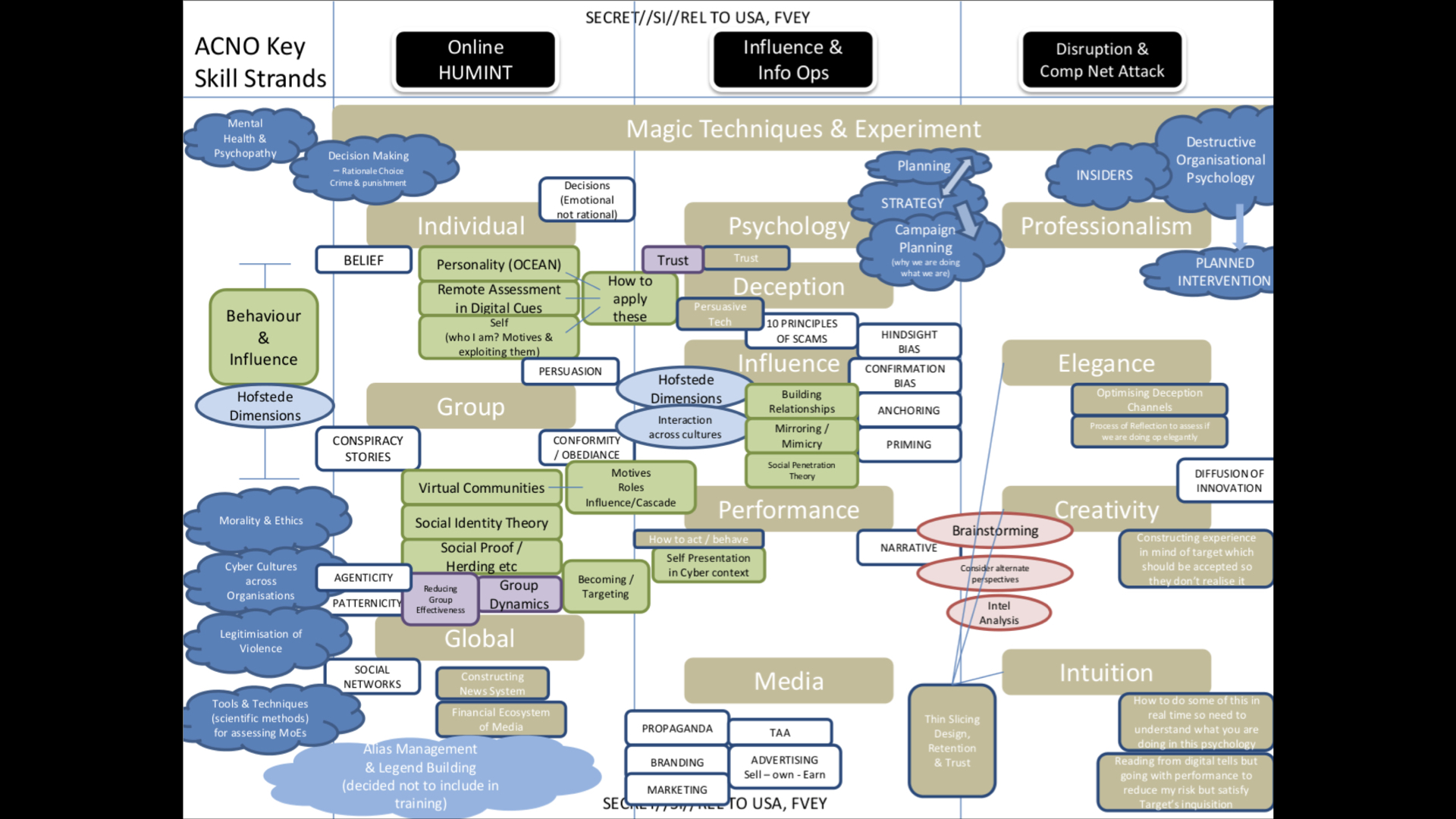

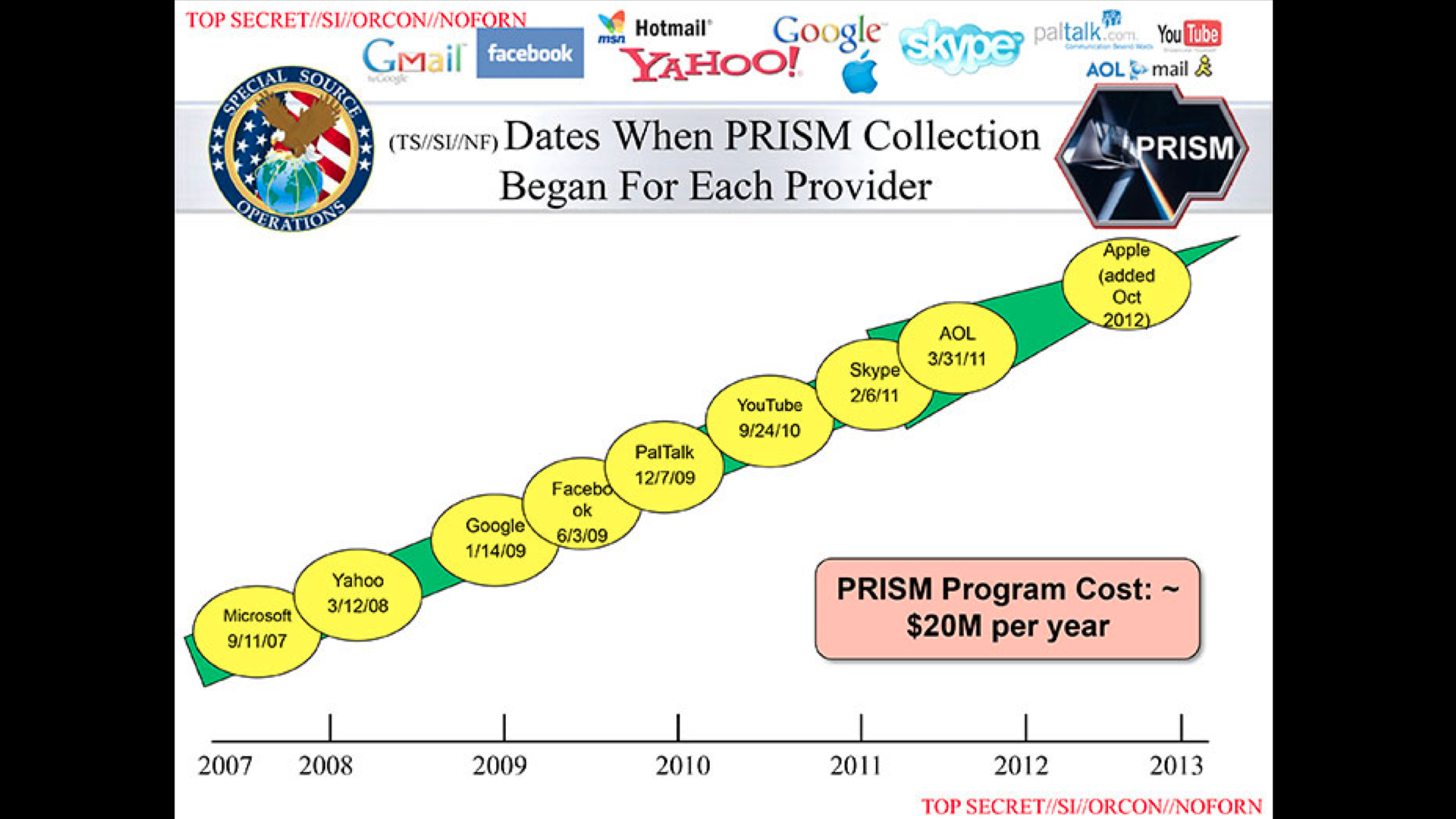

In order to come up with ways in which real-time video manipulation could be (ab)used you don’t need to look any further than the documents published in the Snowden leaks. This one in particular comes courtesy of GCHQ (British intelligence services), from the presentation The Art of Deception: Training for a New Generation of Online Covert Operations.

This slide often gets ridiculed for its poor design, and while it is visually heinous, please take a moment to carefully inspect the middle column, “Influence & Info Ops”. What you will find is a laundry list of abuse. “Building Relationships”, “Mirroring / Mimicry”, “Social Penetration”, these are all things deep-fake video manipulation systems are perfectly suited for. Not to mention “Self Presentation in Cyber context”, NARRATIVE, and then funnily enough all on the same pile, PROPAGANDA, BRANDING, MARKETING and ADVERTISING.

Now to tie back to our commercial use scenarios. Every single engagement you have with the avatars functioning as front-ends of commercial algorithmic superstructures feeds into surveillance programs over which you have zero control, and any kind of oversight is effectively meaningless. These are still all just the supposedly legitimate uses.

“The street finds its own uses for things” as the well-worn William Gibson quote goes, and one of the things the street loves is a good scam! The classic 419 is the kind of thing that at a certain point would be trivial to implement using deep-fake video manipulation. Now as this technology proliferates, people will undoubtedly become more savvy, and these scams may not fool most people. But they don’t have to, and the consequences affect all of us.

If I get a call from ‘John’ at the ‘Microsoft Support Center’ and John tells me there’s a security problem with my computer and asks me for my password I’ll tell John to get lost and go about my day. However when my grandfather gets a call from John I get to spend a day reinstalling his computer and resetting all of his accounts. He doesn’t have the defence mechanisms or media-savviness to deal with this kind of attack. With deep-fake video manipulation the attack surface among the general population stands to increase considerably, and what I’ve come up with here isn’t even an imaginative example.

Our third scenario considers art and activism. For Crang and Graham, considering ubiquitous computing in the context of urbanism, this focused on re-enchanting and reanimating the city. For our little deep-fake extrapolation, enchantment and animation are not things I’m particularly concerned about. There are plenty of artists who are bored with all of “that privacy stuff” and “just want to make cool shit”. I’m sure they will, and I’m happy for them to be doing that. Meanwhile those whose work has something of an activist bent find themselves asking, what the fuck do we even do?

The phrase “death of reality” was somewhere in the promo copy for this event. While it may no longer be suited to analysis through a 2×2 matrix (not that it ever was), reality is still very much alive. Getting punched in the face still hurts. Toxic ideologies spread on viral memetic carriers still manifest in real-world harm done at large scale to vulnerable populations the world over. What can be done? What can be done with deep-fakes?

I can’t claim to have answers for this, but what I can offer perhaps is a basic framework of artistic strategies. I did not come up with these, I was told them in a conversation I had several years ago with exorcist and combat magician Cat Vincent. You may scoff at the notion of someone calling themselves a combat magician, but you have to respect that someone who is able to maintain a professional practice calling themselves one must at the very least be highly skilled in the manipulation of myth and the use of narrative to fight narratives, which is what we are faced with. So while these are the basic strategies you might employ when banishing an unwanted spiritual entity, I believe they are equally applicable in a meme-war fought over the substrate of an algorithmic superstructure. Apologies to Cat if I butcher the paraphrasing of these strategies. If you think they’re great, all credit goes to him.

Number One. If you want to fight a conceptual entity, the most basic way to do it is to summon a bigger, better, badder entity. Be it concept or monster, you want to summon something that can crush or devour whatever it is you’re up against. The trouble with this strategy is that once your bigger/better/badder concept/monster eats the other one you’re left with a super-huge, even more powerful concept/monster that you don’t necessarily know how to control. This new entity is in turn susceptible to being subverted and turned against you through use of the other strategies, e.g:

Number Two. Subvert crucial elements of the entity, causing it to change its nature or render it ineffective. This attack strategy is where most activist art is focused. Using irony, satire and ridicule to pick away at parts of the machine, or pulling back the curtain to reveal its inner workings in the hope that the revelation will motivate others to undertake the work necessary to cause it to change. This strategy is effective to a certain degree, but its weakness is that the entity under attack can easily adapt and co-opt the attack, and if it’s large enough it may not even be affected at all. This leaves the third, and in my opinion most effective strategy:

Number Three. Attack the entity with something orthogonal to its mythos. Use a concept so alien that there is no way its frame of mind can comprehend what’s happening to it, and you can manipulate it at will. This is by far the most effective attack, as evidenced by the fact that it’s the one already being perpetrated at scale against our society. This is why we have a generation of baby-boomers who previously told their children not to talk to strangers on the internet sharing flat-earth memes on Facebook, and people being radicalized through Youtube’s recommendation funnels.

If people don’t have the conceptual mechanisms in place to understand how narrative is created and employed to manipulate, then the better the fake, the more susceptible an increasingly large segment of the population becomes to this kind of attack. Maybe this kind of media literacy should be the domain of primary-school education not art-activism, but here we are. This is where I personally would focus. Not even deploying this as an attack strategy, in the first place simply as defence. Helping people to better understand how narrative is weaponized against them, and providing them with the critical tools to be able to spot a narrative being manipulated or manufactured against them, regardless of how deep the fake is. What is the plot? What is the motive, where are the incentives? People can’t be attacked in this way if they can see it coming.

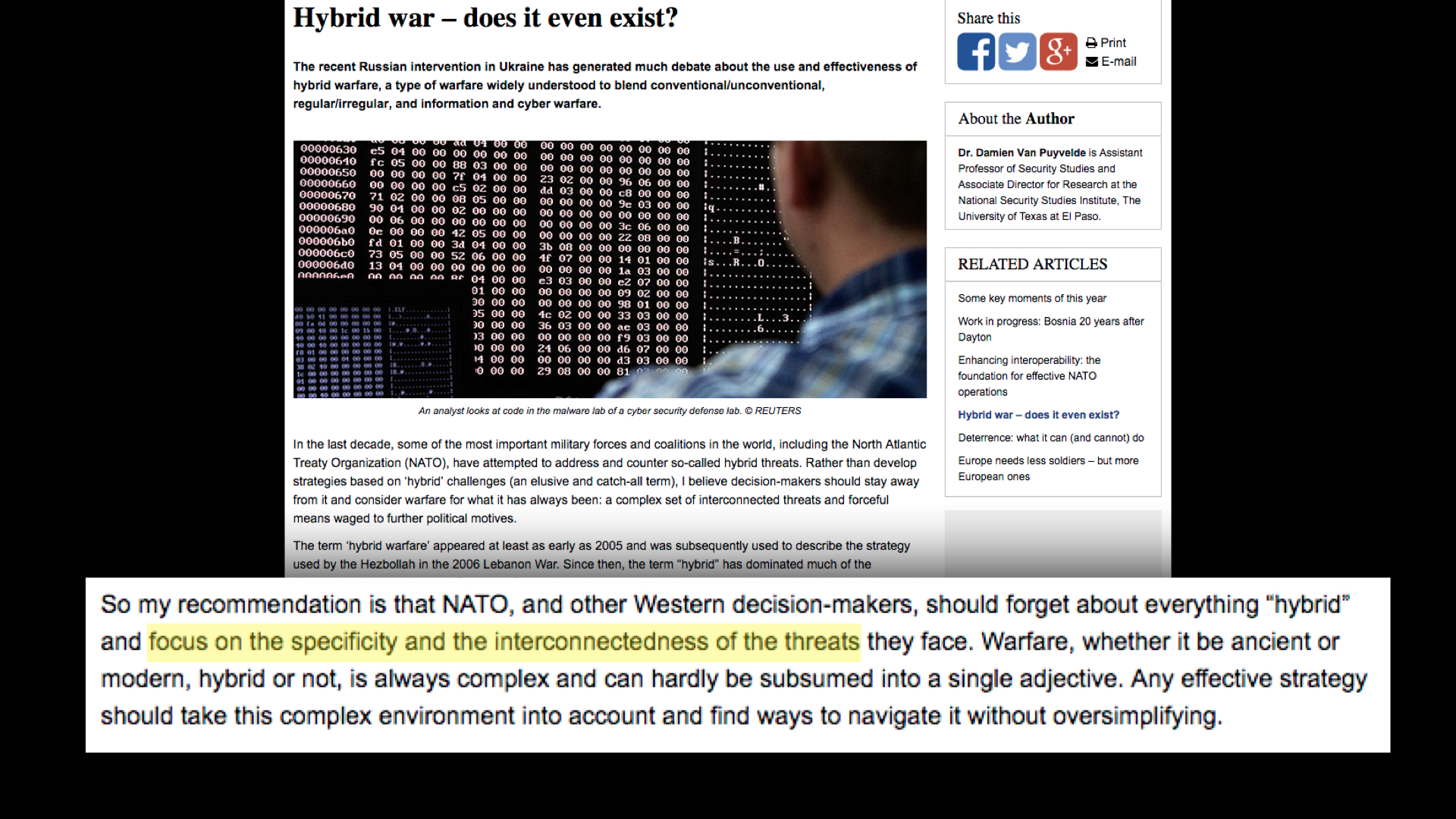

“Most, if not all, conflicts in the history of mankind have been defined by the use of asymmetries that exploit an opponent’s weaknesses, thus leading to complex situations involving regular/irregular and conventional/unconventional tactics. Similarly, the rise of cyber warfare has not fundamentally changed the nature of warfare, but expanded its use in a new dimension.”

Recall again the Charles Stross quote. The deep-fakes are just a nugget of weirdness, almost everything around them was already here. What are the stories they are being used to tell? By whom, and why? Who is vulnerable, who is targeted, who suffers and who profits? None of these questions are new. As Dr. Damien Van Puyvelde writes for NATO Review, focus on the specificity and the interconnectedness of the threats. Think about the context, and help others to build the tools that will allow them to recognize these stories and tackle their incentives and infrastructures instead of being trapped inside them.

The title of this talk is a riff on a passage in Devotions Upon Emergent Occasions by the 17th century metaphysical poet John Donne, “never send to know for whom the bell tolls; It tolls for thee.” The other famous quote from that passage is “No man is an island”. I think the mainstreaming of deep-fake video manipulation technology qualifies as an ‘emergent occasion’, and the general focus on death and the interconnectedness of human-kind seemed fitting. Whether Deep Fakes or Rendering the Truth, the narratives propagated by the algorithmically generated life-like avatars of commerce, crime and conquest will have ripple effects that have consequences for us all. Thanks for reading.